Biota.org

Andrew Phelps' Projects

Many thanks for the opportunity to interview you for Biota.org. Your work links Alife, multi-user/avatar worlds and game development. For those not familiar with your work, could you please give some background on how you came to your present research?

Well, I actually started out as a painter. While I was in art school, someone showed me this thing called 'computer art', which really meant animation, so I started doing that. After I messed around with Alias for a while, I decided that I didn't really want to make movies; I wanted to make interactive things, which meant games. So I've been working in games for a while, and the most interesting things happening in games, to me, are these massively multi-player online (MMO) worlds. But there is a huge problem in MMOs: the social interactions are very rich, but the player/world interactions are very static and very poor. I look to Alife as a way to have the entire world evolve with and around the people in it, rather than remaining a backdrop of static scenery.

There is a strong link between multi-user/avatar worlds and Alife development. There is a shared interest in creating virtual environments, but there seems to be something far stronger. What are your thoughts on this link? What can Alife developers learn from avatar world development?

Well, first off, I think that you are correct in pointing out the link. A lot of folks disagree about that, actually: some think you are sort of contaminating your virtual Petri dish if you let people walk through it all day and tinker with stuff. But in any event, I think the connection is one of complexity. So many things happen in collaborative virtual environments, and especially in games: social networks, reputation trees, collaborative play, emergent rule sets, etc. When you look at how rich the interaction is amongst the people, it provides a very interesting space to ask, 'Hey, how can the world interact with the people? How can players leave a mark on the evolution of this space?' To me, that is a very powerful way of thinking about the field.

Computer game developers seem very interested in contemporary Alife development. What aspects of Alife can game developers learn from? How receptive is commercial game development to the work produced by Alife developers?

This is a pretty up-and-coming area. For a long time, games used really rudimentary AI, because the number of cycles that could be devoted to AI was a really small slice of the pie. Most of the processing power went to graphics, and after graphics to physics, etc. But recently graphics has been offloaded to the GPU, I've seen the first physics chip publicly announced, and with all the multi-core hardware we're entering a space where AI will really get some attention. Given this trend, it seems clear that game AI people are interested in more than one approach.
But Alife holds a very special niche in games development because it is a very promising approach to letting a player individualize their play experience. If, through successive generations of attempts or through other means, a computer can learn how it is being played against, this could have a great impact on gameplay! But for that to happen, Alife has to be adapted and condensed down into a game-usable form. Right now, things take too long, and they are too 'quirky'. And the purists would say, 'Well, that's how life is!' And they are right. But to be usable in a game, an approach has to operate much faster and produce results within some expected tolerance. The golden rule of game development is 'if it looks right and plays right, then it is right.' Alife sometimes has a way of producing off-the-wall results that, while very interesting, might not yet be suitable for commercial games development in some cases.

You teach a course on Game Engine Architecture and Design. What can Alife developers do to create and popularize Alife-related tools that could be used in game development? What tools are missing from contemporary game development that Alife developers could create?

I teach that course with Chris Egert, who is also on the faculty here. I think the biggest thing missing from games development at the moment, with regard to your question, is an SDK for AI/Alife integration. Graphics gives you tools to build on top of: OpenGL, DirectX, etc. Physics gives you Havok. AI is still so specialized on a per-game basis that it's a custom job almost every time. Most people script AI in a higher-level language against the rest of their C/C++ engine. That's great, but it's still very custom, based on the intended usage in-game.
I think it would be a great leg up if someone could come up with a generic 'Alife engine' that allowed a game developer to plug in all kinds of tunable attributes for a variety of entities, in much the same way that GL doesn't dictate what you have to draw but tries to help you out if you are doing common graphics tasks. (Note that I'm not talking about a way of visualizing these things; game developers have great tools at their disposal for drawing all manner of things.) Maybe such a package is out there and I just haven't found it. But most of the work I see is still very low-level and specific. That isn't a knock on that kind of work; I just don't think it will see wide-scale adoption in commercial games until it's better defined and more accessible.
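To make the 'generic Alife engine' idea a little more concrete, here is a minimal Python sketch of what the core of such a package might look like. It is an illustration under assumptions, not an existing SDK: `AlifeEngine`, `Attribute` and `drift` are hypothetical names. Entities carry tunable attributes with designer-set bounds, behaviors plug in per entity kind, and the engine only updates state, leaving drawing to the game. Clamping in `Attribute.set` is one assumed way to keep adaptive results 'within some expected tolerance'.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Attribute:
    """A tunable parameter with designer-chosen bounds (its 'expected tolerance')."""
    value: float
    low: float
    high: float

    def __post_init__(self) -> None:
        self.set(self.value)  # clamp the starting value too

    def set(self, v: float) -> None:
        # Clamp so adapted or evolved values never leave the tolerance band.
        self.value = min(self.high, max(self.low, v))


@dataclass
class Entity:
    kind: str
    attributes: Dict[str, Attribute] = field(default_factory=dict)


# A behavior reads and adjusts one entity's attributes once per tick.
Behavior = Callable[[Entity, random.Random], None]


def drift(name: str, sigma: float) -> Behavior:
    """Example behavior: a random walk on one attribute, standing in for real adaptation."""
    def step(entity: Entity, rng: random.Random) -> None:
        attr = entity.attributes[name]
        attr.set(attr.value + rng.gauss(0.0, sigma))
    return step


class AlifeEngine:
    """Hypothetical engine core: owns entities and behaviors, does no drawing."""

    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)  # seeded so a designer can replay a run
        self.entities: List[Entity] = []
        self.behaviors: Dict[str, List[Behavior]] = {}

    def spawn(self, kind: str, **attrs: Tuple[float, float, float]) -> Entity:
        # Each keyword maps an attribute name to (initial value, low, high).
        entity = Entity(kind, {k: Attribute(*v) for k, v in attrs.items()})
        self.entities.append(entity)
        return entity

    def add_behavior(self, kind: str, behavior: Behavior) -> None:
        # Behaviors attach to a kind of entity, not to one game's custom code.
        self.behaviors.setdefault(kind, []).append(behavior)

    def tick(self) -> None:
        for entity in self.entities:
            for behavior in self.behaviors.get(entity.kind, []):
                behavior(entity, self.rng)


# Usage: a wolf whose aggression wanders but always stays inside [0.0, 1.0].
engine = AlifeEngine(seed=42)
wolf = engine.spawn("wolf", aggression=(0.5, 0.0, 1.0), speed=(3.0, 1.0, 5.0))
engine.add_behavior("wolf", drift("aggression", 0.3))
for _ in range(100):
    engine.tick()
print(round(wolf.attributes["aggression"].value, 3))
```

Keeping behaviors as plain functions keyed by entity kind loosely mirrors the GL analogy: the engine supplies the update loop and bookkeeping, and the game decides what the entities and attributes mean.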

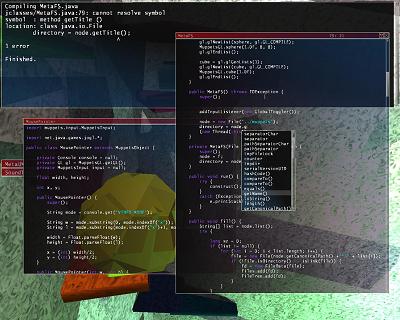

Looking through the documentation and movies online about MUPPETS, it seems like an amazing interface for experimentation. Can you give a definition and some background on the MUPPETS development?

M.U.P.P.E.T.S. (muppets.rit.edu) never ceases to amaze me. It is so far beyond my original expectations. It's similar to Alice, Second Life and a few others, but I think it has a totally different flavor because it puts a standard object-oriented language (Java or C#) and its compiler right inside the world for run-time extensibility. So it's a 3D environment similar to a standard MMORPG, except that at any time, and without leaving the world, you can call up an IDE window and create more objects, new programs, etc. in Java or C#. Which is really, really cool. It's become our play-space for testing out all kinds of ideas. Students have made games, interactive art, Alife, and virtual theatre performances, all inside of MUPPETS. Meanwhile, I'm still amazed that it even runs.

We started building M.U.P.P.E.T.S. 3 years ago as a tool for teaching freshman programming. The theory was really pretty simple: freshmen come in motivated to build games and 3D things similar to what they were playing in high school, but when they get here there is a big disconnect, because we have them develop text-based applications in a shell. We wanted to build a system where a novice programmer could write a few lines of code and add something to a shared 3D environment. Fast forward to now, and we're using it not only for programming education but also as a case study for our graphics class and as a base for all kinds of tools. We recently did some work on molecular visualization with the RIT College of Science. Having a world in which you can create, test, and destroy code without leaving the environment is a really powerful thing, a lot more powerful than we gave it credit for at the outset.
I should also point out that we're very grateful for the funding we've obtained that has allowed us to continue building the system, first from RIT itself and most recently from Microsoft Research.

Now that MUPPETS is live, how have you seen the environment change? Are there particular examples of user-created content in MUPPETS that have gone beyond what you imagined as the project's creator?

Well, part of this is covered above. But there have been so many great things created by students: a motorcycle racing game, big animated robots, all the 'game geek' stuff. There have been a few really off-the-wall things as well. We had a student who was taking Japanese, so he programmed the system so that every time he logged in, Japanese characters would start falling from the sky, and he had to type the equivalent before they hit the ground or they would explode. I realize that similar things have been done before, but he did it in an hour, and it was fully immersive in 3D, with some great effects. Then he used it as a drill for the next week to study for his mid-term. One nice thing is that every piece of in-world content, unless deleted by its author or flagged private, becomes a public part of the world. So as people invent new things, the world grows. In that sense, I have absolutely no idea where it will go; it is up to the world's inhabitants, of which I am only one.

Is there any scope for Alife in the MUPPETS environment?

Absolutely, and this is something I'd like to explore further. For a while we had a system, written by a student, with little fish swarming around based on some genetic algorithms. They had to eat, and if they got close enough together and were in the right mood, they would procreate. Obviously they died off if they didn't eat or once they had lived long enough. Their digital DNA encoded a gatherer pattern for finding food. You could sit in the world and watch the emergent strategy for how the school of fish would move from food source to food source.
This was pretty interesting, but it was also relatively simple (I think it was done for an intro course on genetic algorithms). It points to the power of the tool, in that it can be 3D and immersive almost instantly, but it still didn't really involve the user in any substantial way. There certainly seems to be an opportunity to run some experiments that really target Alife/player interaction within the world, and that is something we've only scratched the surface of. And then you get into this great area of how the evolution of the world, and the Alife within it, affects the narrative of what the user experiences. That's something I've been into for a long time.
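The fish system is described only in outline, so what follows is a minimal Python sketch of that kind of genetic-algorithm school, not the student's actual code. It assumes a 2D world, a three-gene 'digital DNA' (pull toward the nearest food, pull toward the school, swim speed), and invented constants for lifespan, mating distance and the 'right mood' energy threshold. Fish eat, breed by crossover plus mutation when two well-fed fish meet, and die of starvation or old age.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

Genes = Tuple[float, float, float]  # hypothetical: (food_pull, school_pull, speed)
Point = Tuple[float, float]

WORLD = 50.0       # side length of a square 2D world (assumed)
EAT_RADIUS = 1.5
MATE_RADIUS = 2.0
MOOD_ENERGY = 8.0  # "right mood": both parents must be this well fed (assumed)
MAX_AGE = 300      # ticks before a fish dies of old age (assumed)
MAX_POP = 200      # cap to keep the sketch cheap


@dataclass
class Fish:
    x: float
    y: float
    genes: Genes
    energy: float = 6.0
    age: int = 0


def unit(dx: float, dy: float) -> Point:
    d = math.hypot(dx, dy)
    return (dx / d, dy / d) if d > 0 else (0.0, 0.0)


def random_point(rng: random.Random) -> Point:
    return (rng.uniform(0.0, WORLD), rng.uniform(0.0, WORLD))


def breed(a: Genes, b: Genes, rng: random.Random) -> Genes:
    # Uniform crossover, then a small Gaussian mutation on every gene.
    return tuple(rng.choice(pair) + rng.gauss(0.0, 0.05) for pair in zip(a, b))


def step(school: List[Fish], food: List[Point], rng: random.Random) -> List[Fish]:
    """Advance one tick; `food` must be non-empty and is updated in place."""
    if not school:
        return school
    cx = sum(f.x for f in school) / len(school)
    cy = sum(f.y for f in school) / len(school)
    for f in school:
        food_pull, school_pull, speed = f.genes
        # The genes weight "swim to the nearest food" against "stay with the school".
        fx, fy = min(food, key=lambda p: math.hypot(p[0] - f.x, p[1] - f.y))
        ux, uy = unit(fx - f.x, fy - f.y)
        sx, sy = unit(cx - f.x, cy - f.y)
        mx, my = unit(food_pull * ux + school_pull * sx, food_pull * uy + school_pull * sy)
        v = min(abs(speed), 2.0)
        f.x = min(WORLD, max(0.0, f.x + v * mx))
        f.y = min(WORLD, max(0.0, f.y + v * my))
        f.energy -= 0.1 + 0.05 * v  # swimming faster burns more energy
        f.age += 1
        for i, (px, py) in enumerate(food):
            if math.hypot(px - f.x, py - f.y) < EAT_RADIUS:
                f.energy += 4.0
                food[i] = random_point(rng)  # eaten food regrows somewhere else
                break
    # Procreation: nearby pairs that are both in the "right mood" produce one child.
    babies: List[Fish] = []
    mated = set()
    for i, a in enumerate(school):
        for j in range(i + 1, len(school)):
            b = school[j]
            if i in mated or j in mated or min(a.energy, b.energy) < MOOD_ENERGY:
                continue
            close = math.hypot(a.x - b.x, a.y - b.y) < MATE_RADIUS
            if close and len(school) + len(babies) < MAX_POP:
                a.energy -= 3.0
                b.energy -= 3.0
                mated.update((i, j))
                babies.append(Fish((a.x + b.x) / 2, (a.y + b.y) / 2, breed(a.genes, b.genes, rng)))
    # Death: starvation or old age removes a fish.
    return [f for f in school + babies if f.energy > 0 and f.age < MAX_AGE]


rng = random.Random(1)
food = [random_point(rng) for _ in range(5)]
school = [Fish(*random_point(rng), genes=(rng.random(), rng.random(), rng.uniform(0.5, 2.0)))
          for _ in range(30)]
for _ in range(500):
    school = step(school, food, rng)
print(len(school))
```

There is no explicit fitness function here: selection happens implicitly, because only fish whose genes keep them fed long enough get to breed. Whether a given run settles into a recognizable foraging pattern depends on the seed and the assumed constants.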

What is your sense of the broader Alife community?

Sadly, I don't really know. I've met some great people in the space; I remember one time we were all at the Winchester Mystery House, for some reason, as part of one of the conferences. Bruce is always supportive, and I love running into him in various places. I remember running into him in France once: I traveled a very great distance to see someone I usually see in California. I've been focused very internally as of late, building courses and degrees within RIT. It's important work, but it hasn't allowed me to stay as current with the external community as I would like. I'm hoping to 'get back out there' with y'all soon. That said, all the folks I've ever met at Biota, or really at anything Alife-related, have been pretty open-minded and inquisitive. These are traits I hold in high esteem.

What more would you like to see from the Alife community?

Like I said, producing some kind of public-use Alife SDK would be an amazing thing, particularly if it was picked up for use in some commercial venture. But just working on something like that as a community would, I think, be a very neat experience.

Any final thoughts?

Just that I think this is a very interesting field. It has the potential to really change the way people interact with computers in several ways, and I think the games space will be at the forefront of the shift. Some folks have a tendency to discount games as toys or a relatively trivial experience. I would encourage anyone who is truly interested in the intersection of Alife and avatar-based worlds to look closely at what is happening in massively multi-player online games; it's a very important interconnection. Many thanks for the opportunity to talk with you.

The interview was conducted by Biota.org's Tom Barbalet via email on February 9th, 2006.
